What’s New in AI Safety? Understanding Guardrails in 2025 Models

Introduction

Artificial Intelligence (AI) has experienced exponential growth in recent years. By 2025, advanced models like GPT-5, Claude, Gemini, and others are deeply integrated into healthcare, education, finance, legal systems, and more. While these advancements are revolutionary, they also introduce new challenges—especially around AI safety.

Why AI safety matters more than ever in 2025:

AI systems can generate biased outputs, hallucinate facts, or be exploited for harmful purposes. As AI becomes more powerful and widespread, the need for robust AI guardrails has never been more critical.

What you’ll learn in this article:

- Why AI safety has become a global priority in 2025

- What AI guardrails are and how they work

- New techniques for safeguarding AI

- Top companies leading in AI safety

- Key challenges and future developments

Who should read this?

AI developers, business leaders, tech policymakers, educators, and anyone interested in responsible, ethical AI innovation.

1. Why AI Safety Has Become a Priority in 2025

AI safety is a hot topic in 2025 due to several key developments:

- Rise of powerful generative models: Tools like GPT-5 and Claude are influencing sectors that affect human lives directly—like medical diagnostics, legal research, and education.

- Integration into critical systems: AI is no longer limited to chatbots or content generators. It now supports decisions in healthcare, financial forecasting, and public services.

- Public concern: Widespread issues such as misinformation, deepfakes, and bias have raised awareness about the need for strict regulations and proactive safety measures.

Keywords: AI safety 2025, risks of AI models, AI regulation

2. What Are Guardrails in AI?

AI guardrails are built-in safety mechanisms designed to ensure responsible and ethical behavior by AI systems. They act as boundaries that keep AI models from producing harmful, biased, or unsafe outputs.

There are two main categories:

- Training-time safety: Techniques such as dataset curation, human feedback, and value alignment used during model training.

- Deployment-time safety: Real-time moderation tools, content filters, and ethical guidelines applied when the AI is being used.

Types of AI guardrails include:

- Ethical constraints: Prevent harmful or offensive content generation.

- Content filters: Block outputs that contain unsafe, biased, or non-compliant material.

- Output moderation: Continuously review and evaluate AI responses before they reach the end user.

Keywords: AI guardrails, ethical AI systems, AI output filters

3. New Techniques in Guardrail Implementation (2025)

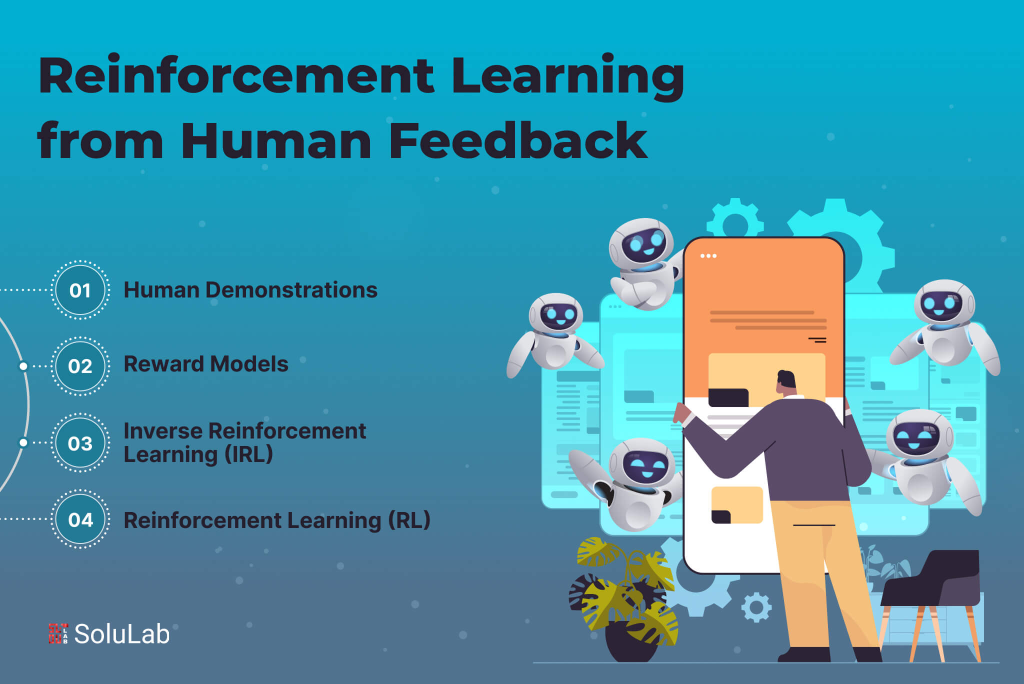

Reinforcement Learning from Human Feedback (RLHF)

RLHF remains a cornerstone for shaping model behavior. In 2025, it has evolved with enhanced feedback loops, involving more diverse and global human inputs to teach AI models what’s acceptable and what’s not.

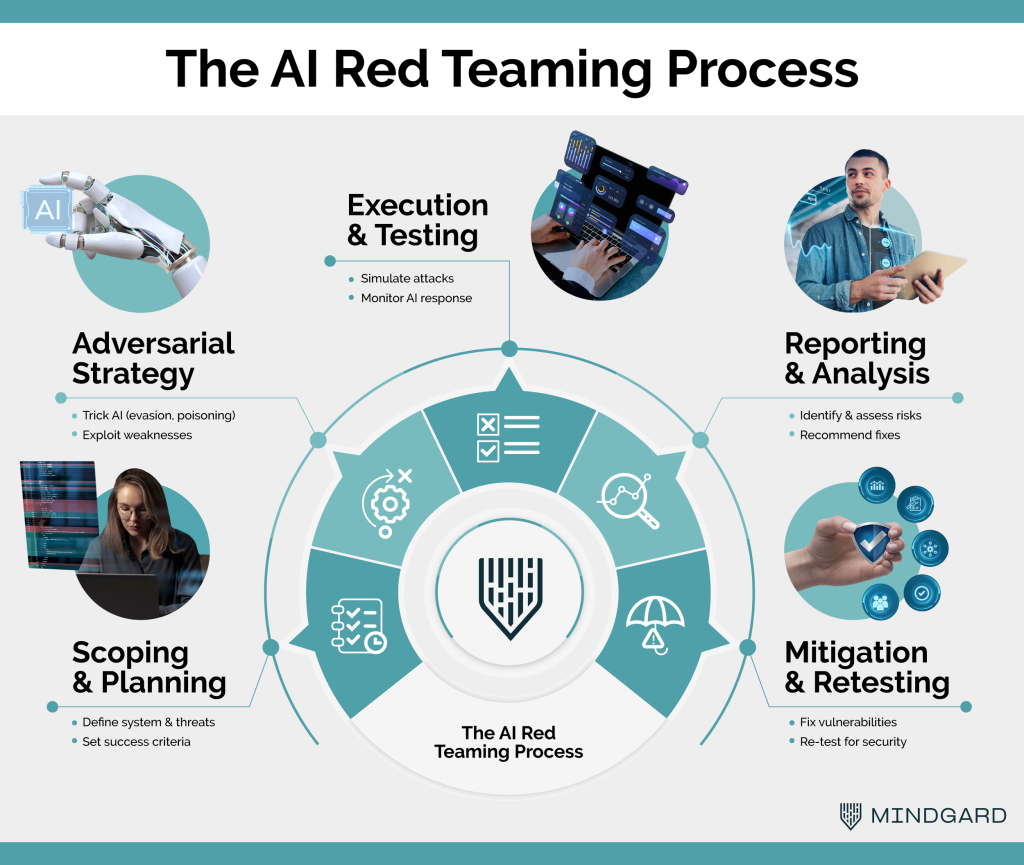

Red Teaming & Adversarial Testing

This involves exposing AI models to adversarial prompts to find and fix vulnerabilities. Regular red teaming ensures that AI systems can withstand misuse and manipulation in real-world scenarios.

Contextual Moderation Tools

Unlike older, static filters, new moderation systems now adapt to the user’s context. These tools adjust for cultural sensitivities, user intent, and conversational tone, allowing a more nuanced safety mechanism.

AI Self-Regulation & Constitutional AI

Leading models are now capable of self-regulating based on internally encoded “constitutions.” These principles allow AI to assess the ethical implications of its outputs and make decisions accordingly—without relying solely on rigid rules.

4. Companies Leading the Charge in AI Safety (2025)

Several organizations are setting new standards in AI safety and guardrail innovation:

- OpenAI: Focuses on red teaming, responsible disclosure, and continuous safety refinement for models like GPT-5.

- Anthropic: Innovators behind Claude, a model built on Constitutional AI principles that embed safety directly into its core.

- Google DeepMind: Known for cutting-edge research in model alignment, explainability, and ethical evaluations.

- Meta: Championing open-source AI with embedded safety layers that allow community contributions to guardrail development.

Keywords: AI safety leaders 2025, ethical AI companies, AI guardrail development

5. Challenges in AI Safety & Guardrails

While advancements are promising, guardrails face several limitations:

- False positives: Overblocking of safe and helpful content can hinder user experience.

- Performance trade-offs: Some guardrails may slow down AI responses or reduce their creativity.

- Cultural bias: Guardrails designed in one cultural context may misinterpret safe content from another, leading to unfair moderation.

Keywords: AI safety challenges, guardrail limitations, AI bias

6. What the Future Holds for AI Guardrails

Looking ahead, guardrails will evolve to become more intelligent, transparent, and aligned with global regulations:

- AI moderating AI: Autonomous systems may soon monitor each other, detecting rule violations or unsafe behavior in real-time.

- Regulatory compliance: Governments are introducing frameworks like the EU AI Act and India’s upcoming AI governance strategy.

- Open vs. closed source models: There’s an ongoing debate about whether open-source models can be made safer or if closed systems offer better control.

Transparency, auditability, and fairness will be the pillars of future AI safety systems.

Conclusion

AI guardrails in 2025 represent a major shift toward responsible innovation. As AI tools grow more powerful, ensuring they remain ethical, fair, and safe is essential.

Startups and tech giants alike are prioritizing AI safety to align with societal values and regulatory expectations.

We encourage developers, enterprises, and educators to adopt best practices and explore tools that promote AI safety and ethical AI development.Stay informed. Subscribe to HERE AND NOW AI for expert insights and resources on the future of AI.