How Nvidia’s Rubin Chip Is Reshaping AI Hardware Performance

Artificial intelligence (AI) is evolving at lightning speed, driving demand for faster, smarter, and more efficient hardware. From large language models to real-time robotics, cutting-edge AI applications require immense compute power. Nvidia’s upcoming Rubin platform, the successor to the Blackwell architecture, is designed to meet that demand with a major jump in performance and efficiency for 2026 and beyond.

In this blog, we explore how the Rubin chip is set to reshape AI infrastructure and what its performance and efficiency gains could mean for researchers, developers, and enterprises worldwide.

🔍 What Is the Nvidia Rubin Chip?

The Nvidia Rubin chip is a next-generation AI superchip platform expected to launch in 2026. It pairs the new Rubin GPU with the Vera CPU, the successor to Nvidia’s Grace CPU and the first to use Nvidia-designed custom Arm cores, in a single superchip architecture. Designed to power future data centers, Rubin targets AI training and inference at very large scale.

Key Specs at a Glance:

- Reportedly built on a 3nm-class process node

- Equipped with HBM4 memory (up to 288 GB per GPU)

- Delivers up to 13 TB/s of memory bandwidth per GPU

- Supports FP4 and FP8 low-precision compute for AI inference and training

- Connects GPUs with NVLink 6, offering up to 260 TB/s of aggregate bandwidth across a Vera Rubin NVL144 rack

This architecture will support a wide range of AI workloads—from language models and simulations to autonomous robotics and virtual environments.
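To see what the memory specs above mean in practice, here is a back-of-envelope sketch that estimates how large a model fits in 288 GB at different precisions, and the fastest single-stream (batch size 1) decode rate that 13 TB/s allows. It assumes decimal units, ignores KV-cache and activation memory, and treats each generated token as one full read of the weights. The 70B-parameter model is hypothetical, and the result is a rough ceiling, not a benchmark.

```python
# Back-of-envelope sizing for one Rubin GPU from the listed specs.
# Assumptions (not Nvidia figures): decimal units (1 GB = 1e9 bytes), all
# weights resident in HBM, KV cache ignored, and batch-1 decoding that reads
# every weight once per generated token (i.e. purely bandwidth-bound).

HBM_CAPACITY_BYTES = 288e9   # 288 GB of HBM4 per GPU
HBM_BANDWIDTH_BPS = 13e12    # 13 TB/s per GPU

BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}


def max_params(bits: int, capacity: float = HBM_CAPACITY_BYTES) -> float:
    """Largest parameter count whose weights alone fit in HBM."""
    return capacity * 8 / bits


def decode_tokens_per_s_upper_bound(params: float, bits: int,
                                    bandwidth: float = HBM_BANDWIDTH_BPS) -> float:
    """Ceiling on batch-1 decode rate: bandwidth / bytes of weights read per token."""
    weight_bytes = params * bits / 8
    return bandwidth / weight_bytes


if __name__ == "__main__":
    for name, bits in BITS_PER_PARAM.items():
        print(f"{name}: up to {max_params(bits) / 1e9:.0f}B params in 288 GB")
    # Hypothetical 70B-parameter model stored in FP8 (about 70 GB of weights).
    print(f"70B @ FP8: <= {decode_tokens_per_s_upper_bound(70e9, 8):.0f} tokens/s")
```

Under these assumptions a 70B-parameter FP8 model could have its weights streamed about 186 times per second on one GPU. Batching many requests reuses each weight read across several tokens, which is why aggregate serving throughput is far higher than this single-stream bound.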

🚀 Why the Nvidia Rubin Chip Matters for AI Hardware Performance

Frontier models such as GPT-4 and its successors need far more compute with each generation, and training and serving them at scale is increasingly limited by memory bandwidth, interconnect bandwidth, and power, not just raw arithmetic. Nvidia’s Rubin architecture targets all of these bottlenecks at once to keep pace with the growing complexity and scale of AI.

1. Massive Performance Gains

Nvidia projects that a full Vera Rubin NVL144 rack will deliver up to 3.6 exaFLOPS of FP4 inference and 1.2 exaFLOPS of FP8 training performance, roughly 3.3x a Blackwell Ultra (GB300 NVL72) rack. These are rack-level figures spread across 72 Rubin GPU packages (144 GPU dies), not the output of a single superchip. That headroom makes Rubin well suited to training large-scale AI models and serving real-time inference.
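Nvidia quotes the 3.6 and 1.2 exaFLOPS figures for a complete Vera Rubin NVL144 rack. This sketch converts them to per-GPU numbers and makes a rough training-time estimate. It assumes 72 GPU packages per rack (the “144” counts GPU dies, two per package), the common 6 × parameters × tokens approximation for dense transformer training FLOPs, and a hypothetical 40% model-FLOPs utilization (MFU). The model size, token count, and rack count are illustrative, not tied to any real training run.

```python
# Per-GPU figures and a rough training-time estimate derived from Nvidia's
# projected Vera Rubin NVL144 rack numbers.
# Assumptions: 72 GPU packages per rack, total training FLOPs ~= 6 * params *
# tokens (a rule of thumb for dense transformers), and a hypothetical MFU.

RACK_FP4_FLOPS = 3.6e18   # projected FP4 inference, whole NVL144 rack
RACK_FP8_FLOPS = 1.2e18   # projected FP8 training, whole NVL144 rack
PACKAGES_PER_RACK = 72    # assumption: 144 GPU dies, two per package

SECONDS_PER_DAY = 86_400


def per_package(rack_flops: float, packages: int = PACKAGES_PER_RACK) -> float:
    """Split a rack-level FLOPS figure evenly across GPU packages."""
    return rack_flops / packages


def training_days(params: float, tokens: float, rack_flops: float,
                  mfu: float, racks: int = 1) -> float:
    """Wall-clock days to train, assuming total FLOPs ~= 6 * params * tokens
    and that a fraction `mfu` (0-1) of peak is sustained across all racks."""
    total_flops = 6 * params * tokens
    sustained_flops = rack_flops * mfu * racks
    return total_flops / sustained_flops / SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"FP4 per package: {per_package(RACK_FP4_FLOPS) / 1e15:.1f} PFLOPS")
    print(f"FP8 per package: {per_package(RACK_FP8_FLOPS) / 1e15:.1f} PFLOPS")
    # Hypothetical run: 70B parameters, 15T tokens, 40% MFU, 8 racks.
    days = training_days(70e9, 15e12, RACK_FP8_FLOPS, mfu=0.40, racks=8)
    print(f"Estimated training time: {days:.0f} days")
```

Under those assumptions each Rubin package works out to about 50 PFLOPS of FP4 and roughly 17 PFLOPS of FP8, and the hypothetical run takes about 19 days on eight racks. The estimate scales inversely with the utilization figure, so it is best read as an order-of-magnitude guide.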

2. Lower Energy Consumption

With power-hungry AI workloads becoming more common, energy efficiency is crucial. Nvidia is positioning Rubin to deliver significantly more performance per watt than Blackwell, making it a more sustainable choice for data centers and supercomputers. Total power per rack may still rise; the gain is in work done per joule, which lowers the energy cost of each training step or generated token and, with it, operating costs and environmental impact.
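Performance per watt is easiest to reason about as energy per unit of work. The sketch below computes joules per generated token as average power divided by throughput. Every power and throughput value in it is a hypothetical placeholder, not an Nvidia figure; the point is only to show how a system that draws more power can still use less energy per token.

```python
# Energy per generated token = average power draw / token throughput.
# Every number used below is a hypothetical placeholder, not an Nvidia figure.

JOULES_PER_KWH = 3.6e6


def joules_per_token(power_watts: float, tokens_per_s: float) -> float:
    """Watts are joules per second, so (J/s) / (tokens/s) gives J/token."""
    return power_watts / tokens_per_s


def kwh_per_million_tokens(power_watts: float, tokens_per_s: float) -> float:
    """Energy needed to generate one million tokens, in kilowatt-hours."""
    return joules_per_token(power_watts, tokens_per_s) * 1e6 / JOULES_PER_KWH


if __name__ == "__main__":
    # Hypothetical older system vs. a hypothetical newer one that draws more power.
    older = joules_per_token(power_watts=1_000, tokens_per_s=10_000)
    newer = joules_per_token(power_watts=1_800, tokens_per_s=30_000)
    print(f"older: {older:.3f} J/token, newer: {newer:.3f} J/token")
    print(f"newer: {kwh_per_million_tokens(1_800, 30_000):.4f} kWh per 1M tokens")
```

In this made-up example the newer system draws 80% more power but triples throughput, so each token costs 40% less energy (0.06 J versus 0.1 J).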

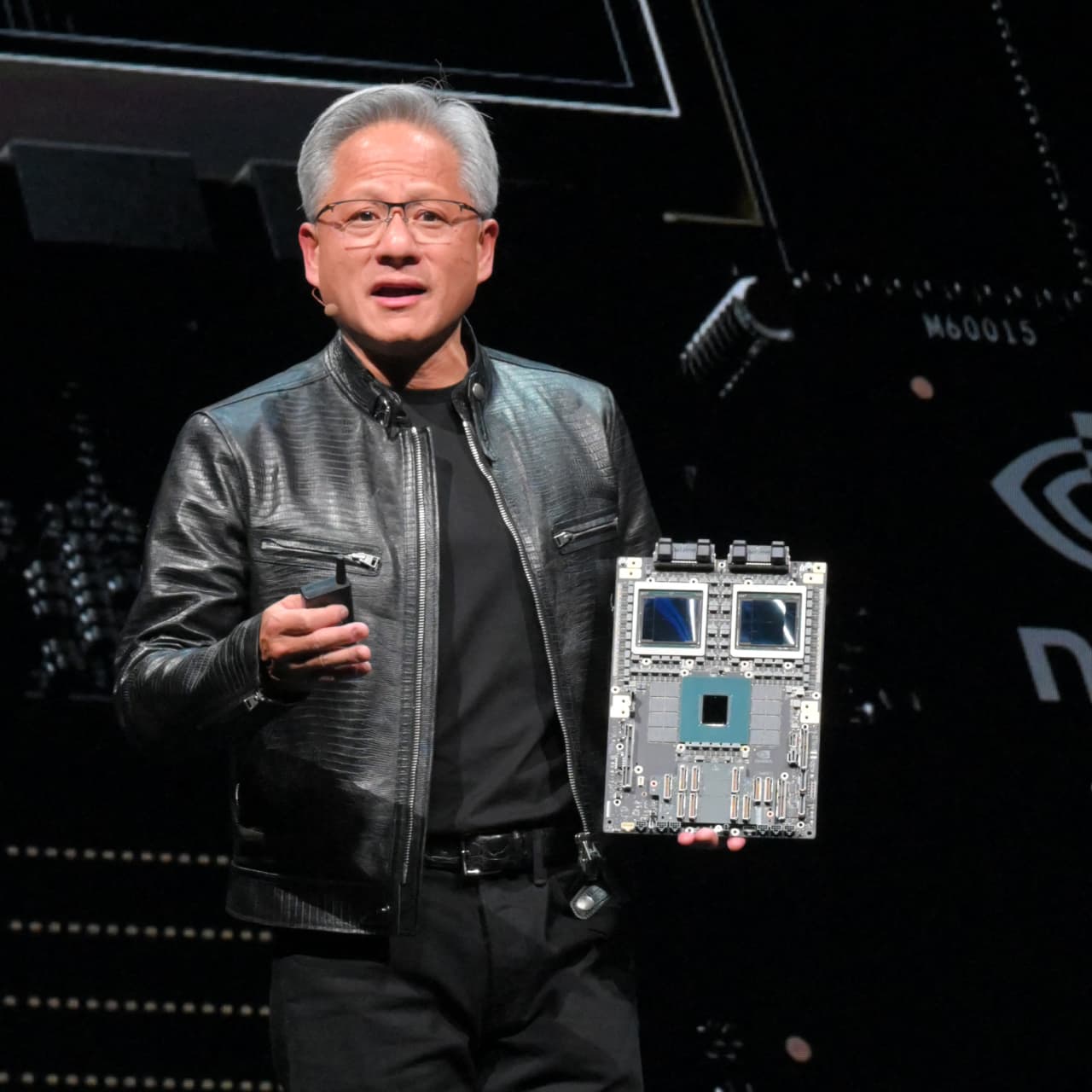

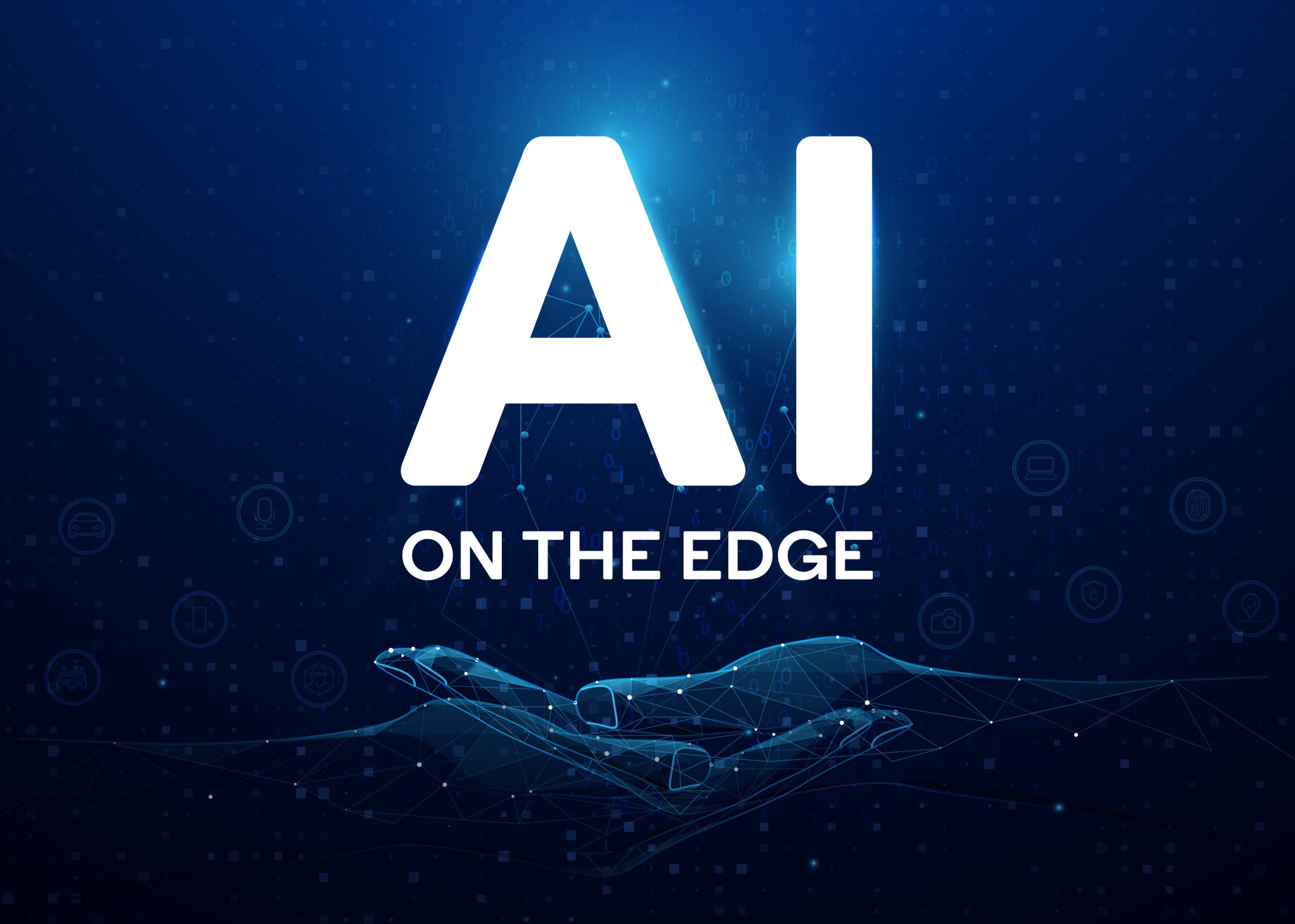

3. Support for AI at the Edge

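Edge AI is becoming a priority as more intelligence moves into devices like smartphones, drones, and robots. Rubin itself is a data center platform, and Nvidia has not detailed compact Rubin variants for edge devices, so any such parts remain speculative. Its impact on the edge is more likely to be indirect: the compact models that run on devices are typically trained, distilled, and quantized on large data center clusters, and faster clusters shorten that pipeline.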

🔗 Rubin and the Future of AI Infrastructure

Rubin isn’t just a new chip; it’s part of a broader AI platform from Nvidia, designed to work alongside the company’s other hardware and software:

- Spectrum-X networking systems for high-bandwidth AI data centers

- Next-gen CUDA libraries tailored for Rubin’s architecture

- DGX systems and SuperPOD clusters powered by Rubin for scalable AI deployment

- Nvidia Dynamo, an open-source inference-serving framework for orchestrating large, distributed inference workloads across many GPUs

Together, these components create a powerful and scalable AI platform that supports everything from research labs to commercial cloud providers.

🧠 Impact on AI Development and Research

Rubin will significantly empower:

- Researchers building larger, more accurate models faster than ever

- Startups deploying cost-effective AI services using Rubin-powered infrastructure

- Cloud providers offering highly efficient AI compute instances for their customers

- Industries like healthcare, finance, and manufacturing, which rely on low-latency, high-accuracy inference

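Rubin’s low-precision compute modes, such as FP4 and FP8, are well suited to high-volume inference, which accounts for much of real-world AI usage. Fewer bits per value means a larger model fits in the same memory and each generated token moves less data, which makes scaling AI more affordable and accessible, even for smaller companies.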
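Block scaling is what makes 4-bit floating point usable in practice: each small block of values shares one scale factor, and each value is stored as a 4-bit E2M1 number (1 sign bit, 2 exponent bits, 1 mantissa bit). The toy quantizer below illustrates the idea in pure Python. It is a simplified sketch in the spirit of formats such as MXFP4 and NVFP4, not Nvidia’s hardware implementation: the scale is kept as a Python float rather than an 8-bit value, ties round toward the smaller magnitude, and the block size is a free parameter.

```python
# Toy block-scaled FP4 (E2M1) quantizer: a simplified illustration, not a
# real MXFP4/NVFP4 implementation. Assumptions: the per-block scale stays a
# Python float (real formats store it in 8 bits), ties round toward the
# smaller magnitude, and block_size is a free parameter.

# Non-negative values representable in FP4 E2M1; the sign is handled separately.
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_MAX = 6.0


def quantize_block(values: list[float]) -> tuple[float, list[float]]:
    """Scale the block so its largest magnitude maps to 6.0, then round each
    scaled value to the nearest E2M1 magnitude, keeping the sign."""
    amax = max(abs(v) for v in values)
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    codes = []
    for v in values:
        target = abs(v) / scale
        # min() returns the first closest entry, so ties go to the smaller value.
        mag = min(E2M1_MAGNITUDES, key=lambda q: abs(target - q))
        codes.append(mag if v >= 0 else -mag)
    return scale, codes


def quantize(values: list[float], block_size: int = 16) -> list[tuple[float, list[float]]]:
    """Split values into blocks and quantize each block independently."""
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]


def dequantize(blocks: list[tuple[float, list[float]]]) -> list[float]:
    """Multiply each 4-bit code by its block's shared scale."""
    return [scale * code for scale, codes in blocks for code in codes]


if __name__ == "__main__":
    weights = [0.12, -0.40, 0.33, 0.05, -0.27, 0.60, -0.01, 0.18]
    restored = dequantize(quantize(weights, block_size=4))
    for w, r in zip(weights, restored):
        print(f"{w:+.3f} -> {r:+.3f}")
```

Storing one 8-bit scale for every 16 values adds 8 / 16 = 0.5 bits per weight, so a block-scaled FP4 format costs roughly 4.5 bits per parameter: still more than 3.5x smaller than FP16.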

📊 Comparison: Rubin vs. Blackwell vs. Hopper

| Feature | Hopper (2022) | Blackwell (2024) | Rubin (2026, expected) |
| --- | --- | --- | --- |
| Process Node | 4nm (TSMC 4N) | 4nm (TSMC 4NP) | 3nm-class (reported) |
| Peak Dense FP8 per GPU | ~2 PFLOPS (H100 SXM) | ~4.5 PFLOPS (B200) | ~17 PFLOPS (projected; 1.2 EFLOPS per NVL144 rack ÷ 72 GPU packages) |
| Lowest-Precision Tensor Core Format | FP8 | FP4 | FP4 |
| Memory Bandwidth per GPU | ~3.35 TB/s (HBM3) | ~8 TB/s (HBM3e) | ~13 TB/s (HBM4) |
| Inference Throughput | Moderate | High | Very high (projected) |
| Energy Efficiency | Moderate | High | Very high (projected) |

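The table points to a large generational jump, especially in memory bandwidth and low-precision throughput. If Nvidia meets these targets, Rubin is well placed to become the reference point for AI hardware in 2026, though its figures remain projections until the hardware ships.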

🧩 Final Thoughts

The Nvidia Rubin chip isn’t just an upgrade; it’s a strategic milestone in AI hardware design. With a projected 3.3x jump in rack-level AI throughput over Blackwell Ultra, better performance per watt, and a design built for large-scale, real-time inference, Rubin positions Nvidia to lead the AI race into the next decade.

Whether you’re a researcher training the next GPT-like model, a business building enterprise-grade AI tools, or a developer looking to scale real-time applications, Rubin is the future-ready hardware you’ve been waiting for.

Stay connected with us on HERE AND NOW AI.